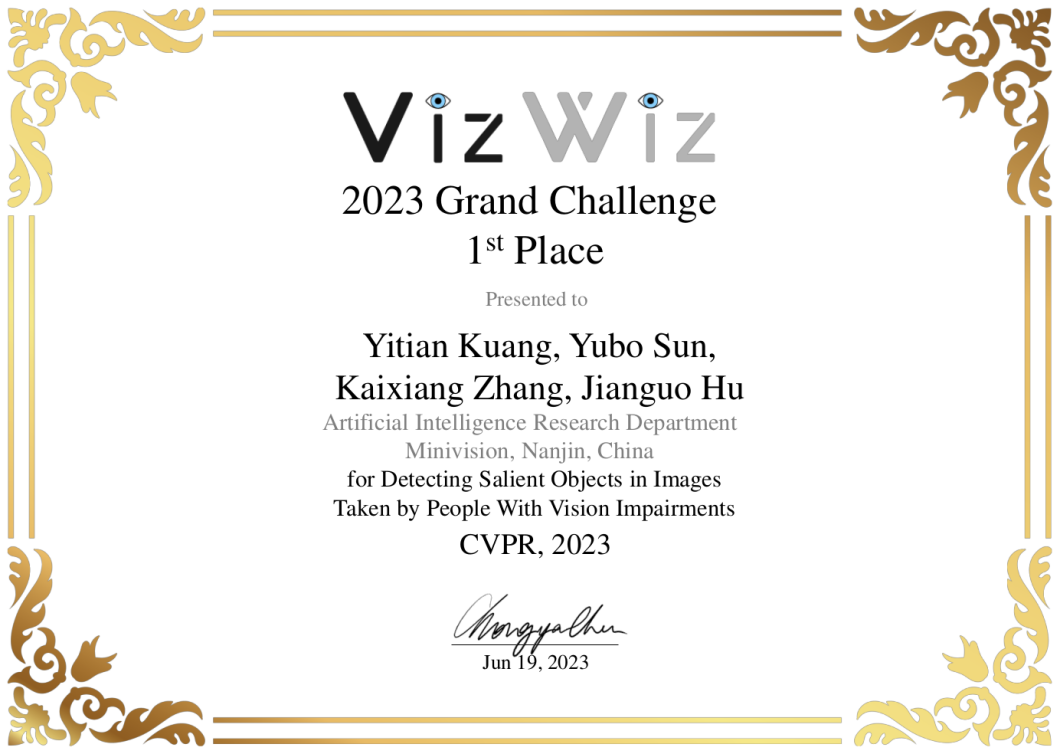

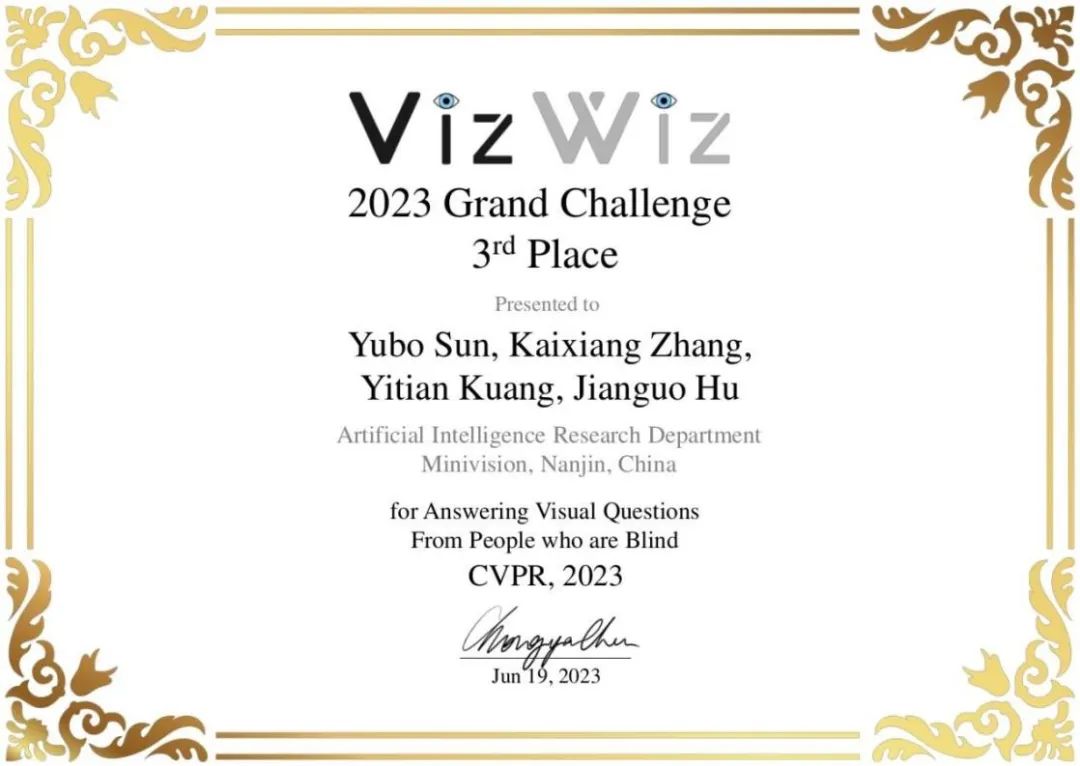

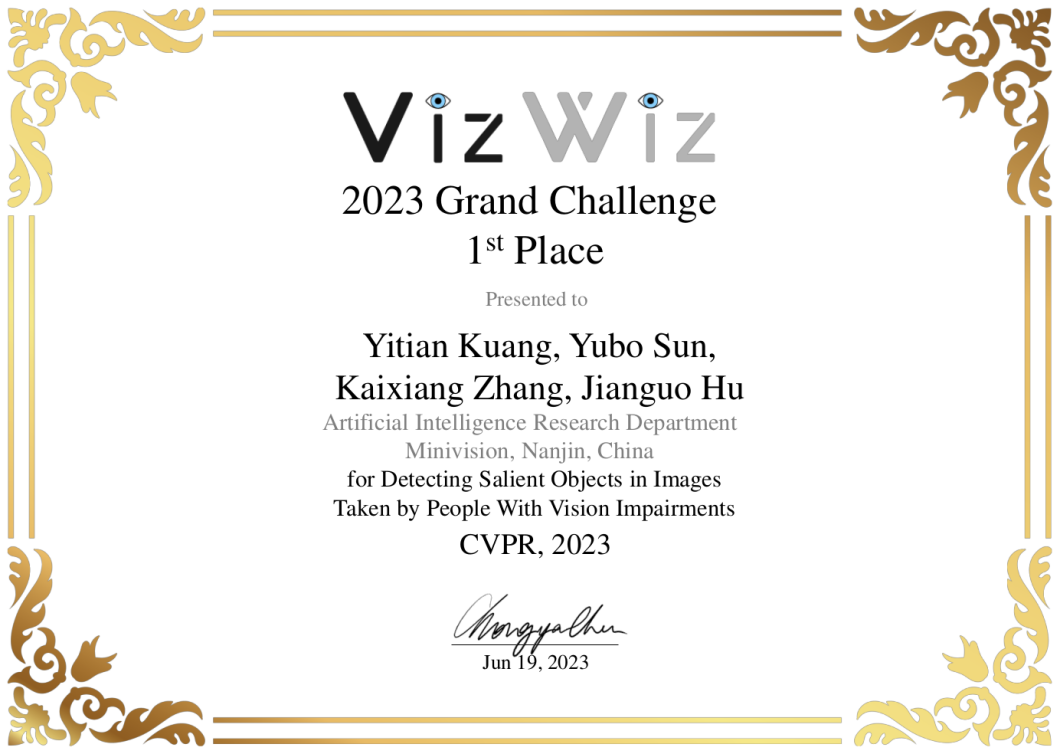

Recently, the results of the CVPR 2023 VizWiz Grand Challenge Workshop competition were announced. The Minivision Technology team won first place in the salient object detection track and third place in the visual question answering (VQA) track.

01 VizWiz Grand Challenge: "One First Place, One Third Place" Across Two Tracks

CVPR, one of the world's top three computer vision conferences, is hosted by IEEE and is often called the "Oscars" of computer vision. The VizWiz Grand Challenge Workshop, held at CVPR, is an international competition jointly organized by Microsoft, Apple, and the University of Colorado Boulder.

The competition addresses the needs of visually impaired people. By improving the accuracy of vision algorithms that help them perceive their surroundings, it aims to foster collaborative development of assistive-technology algorithms in the computer vision community.

02 Salient Object Detection Track: Minivision Technology's First-Place Solution

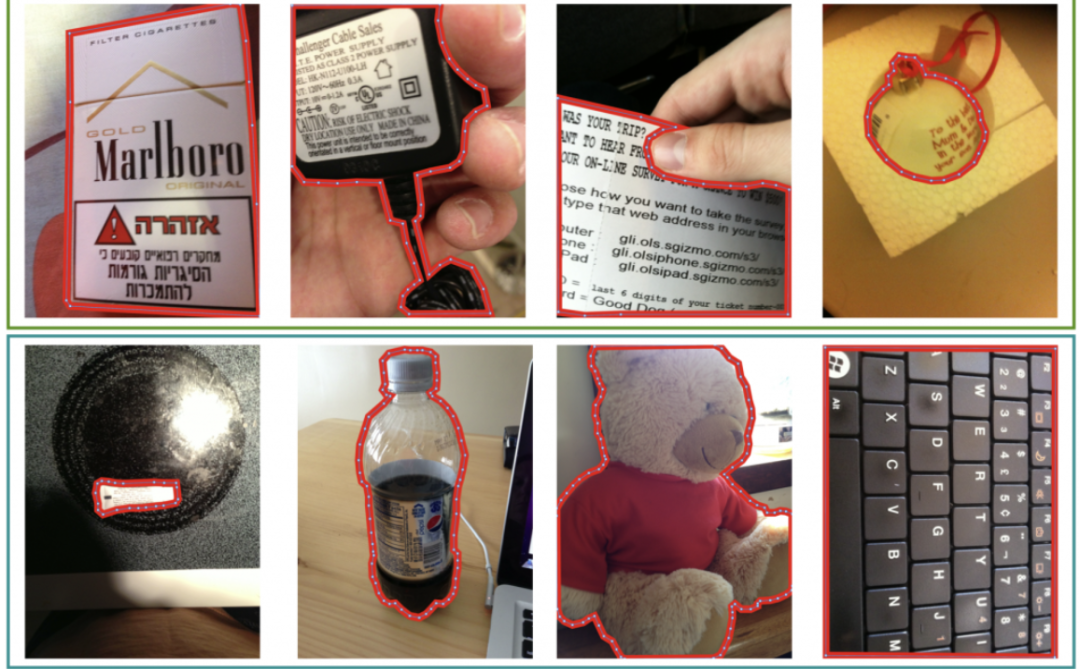

Salient object detection (SOD) is an important computer vision task. It aims to identify the most salient object or region in an image, letting a computer determine which parts of the image draw attention.

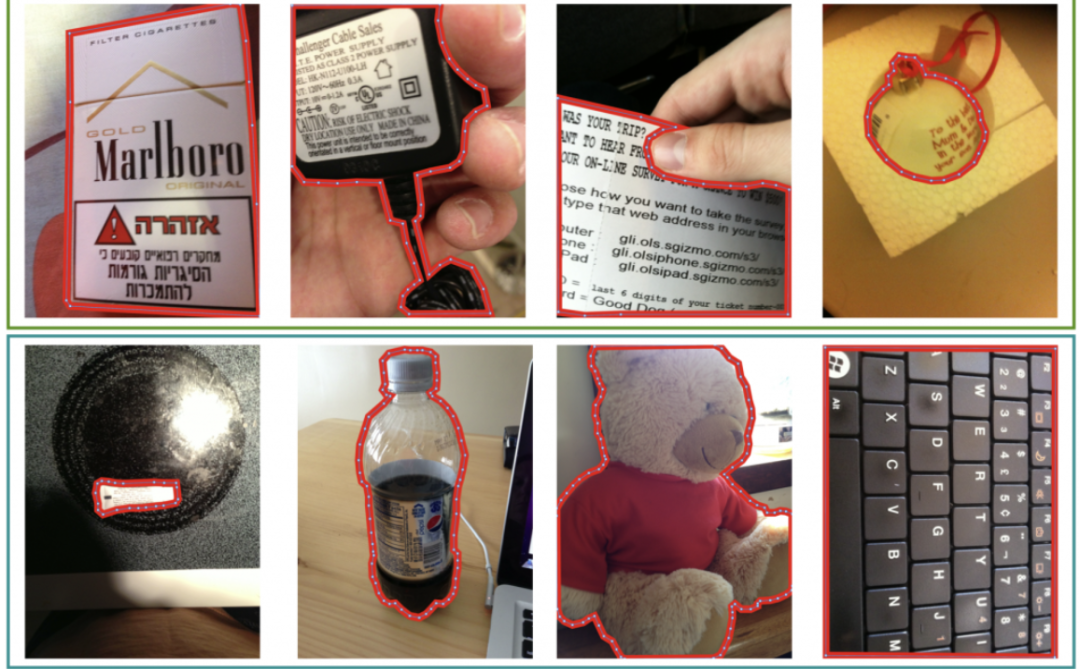

The official dataset: all images were taken by visually impaired people.

The official dataset contains 19,116 training images, 6,105 validation images, and 6,779 test images.

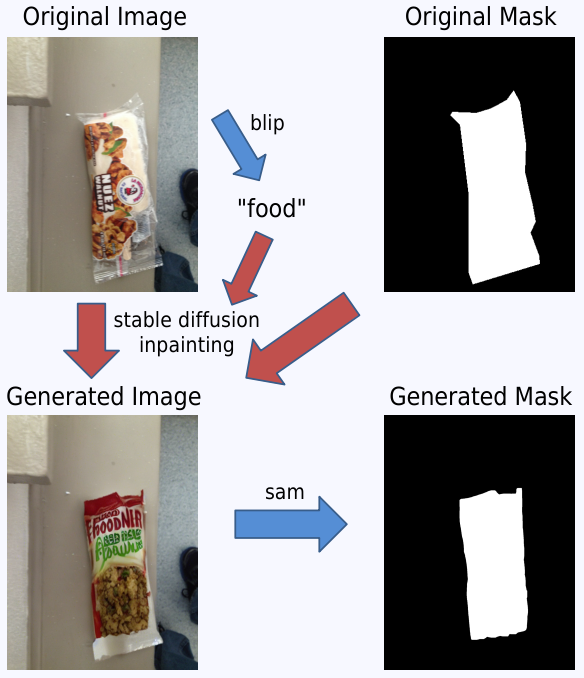

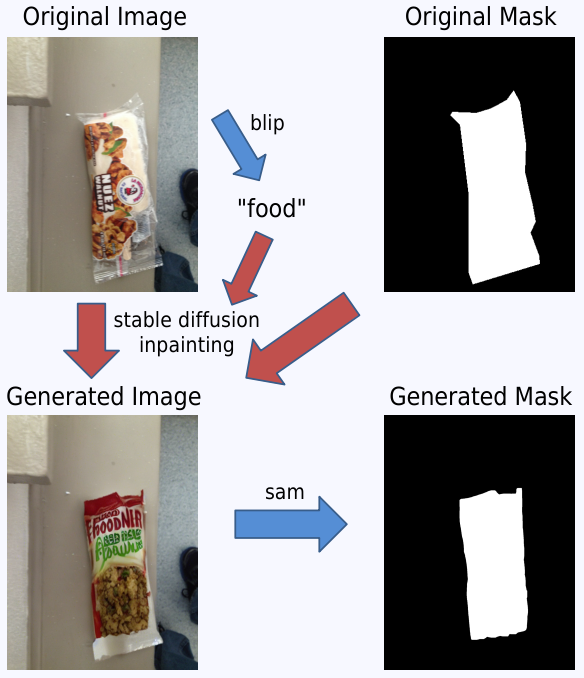

For data augmentation, we use a multimodal model to predict a description of the salient object in an image (for example, "food"). A diffusion model then generates a target at the corresponding position based on that description, and the generated image is segmented to obtain its mask. This method can efficiently produce a large amount of high-quality data and increase data diversity.

Pipeline for generating images and their masks

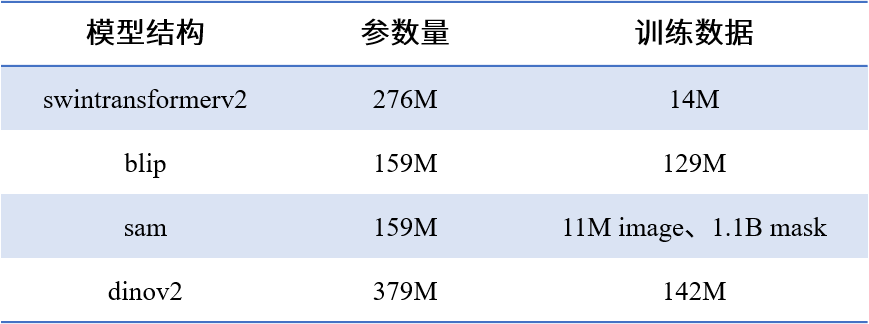

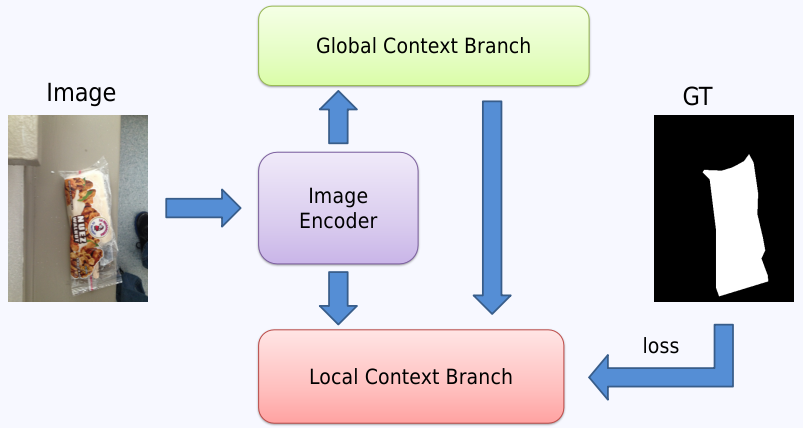

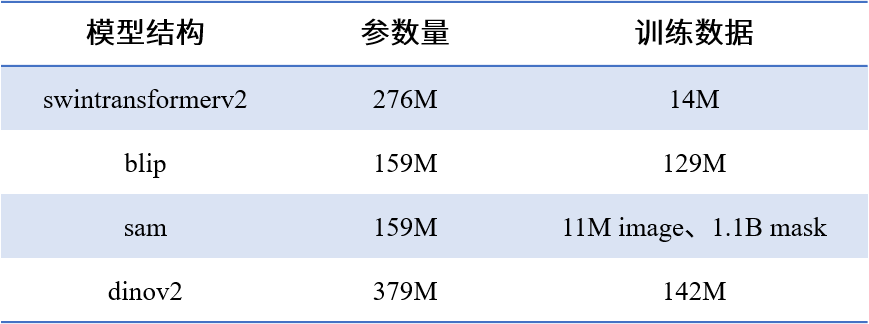

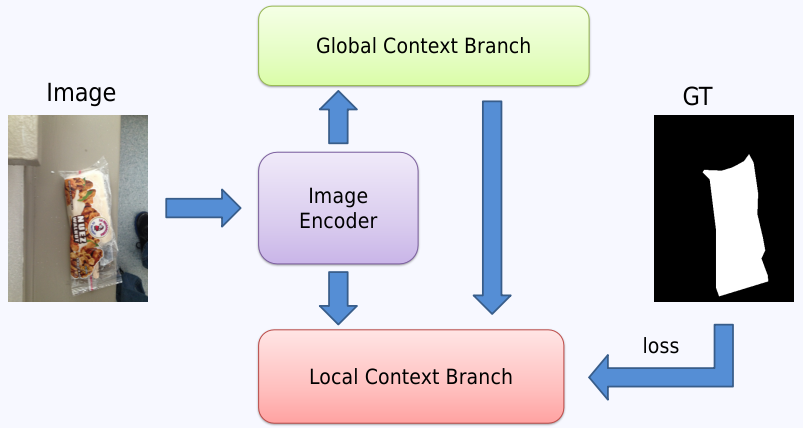
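
The augmentation pipeline described above can be sketched as follows. This is a minimal, runnable illustration under stated assumptions, not the team's code. `describe`, `generate`, and `segment` are hypothetical stand-ins for the multimodal captioner, the diffusion model, and the segmentation model. The `toy_*` functions exist only so the sketch runs without any real models. Locating the object by the bounding box of its ground-truth mask is also an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Image = List[List[float]]        # toy grayscale image, H x W
Mask = List[List[int]]           # binary saliency mask, H x W
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


@dataclass
class Sample:
    image: Image
    mask: Mask
    caption: str


def bounding_box(mask: Mask) -> Box:
    """Tight box around the salient pixels of a binary mask."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    cols = [j for j in range(len(mask[0])) if any(row[j] for row in mask)]
    if not rows:
        raise ValueError("mask contains no salient pixels")
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def augment(
    image: Image,
    mask: Mask,
    describe: Callable[[Image, Mask], str],        # hypothetical multimodal captioner
    generate: Callable[[Image, Box, str], Image],  # hypothetical diffusion model
    segment: Callable[[Image, Box], Mask],         # hypothetical segmenter
) -> Sample:
    caption = describe(image, mask)            # e.g. "food"
    box = bounding_box(mask)                   # where the salient object sits
    new_image = generate(image, box, caption)  # synthesize a new object there
    new_mask = segment(new_image, box)         # label the generated object
    return Sample(new_image, new_mask, caption)


# Toy stand-ins so the sketch runs end to end; real models would replace these.
def toy_describe(image: Image, mask: Mask) -> str:
    return "food"


def toy_generate(image: Image, box: Box, caption: str) -> Image:
    top, left, bottom, right = box
    return [[1.0 if top <= i < bottom and left <= j < right else v
             for j, v in enumerate(row)] for i, row in enumerate(image)]


def toy_segment(image: Image, box: Box) -> Mask:
    top, left, bottom, right = box
    return [[int(v > 0.5 and top <= i < bottom and left <= j < right)
             for j, v in enumerate(row)] for i, row in enumerate(image)]
```

Keeping the three models behind plain callables means each stage can be swapped independently. The same loop then produces any number of new image/mask pairs from the existing training set.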

We used SelfReformer as the base framework and improved the feature-extraction ability of its image encoder by initializing it with pre-trained weights from large vision models.

Comparison of image encoder parameter counts

SelfReformer model architecture diagram

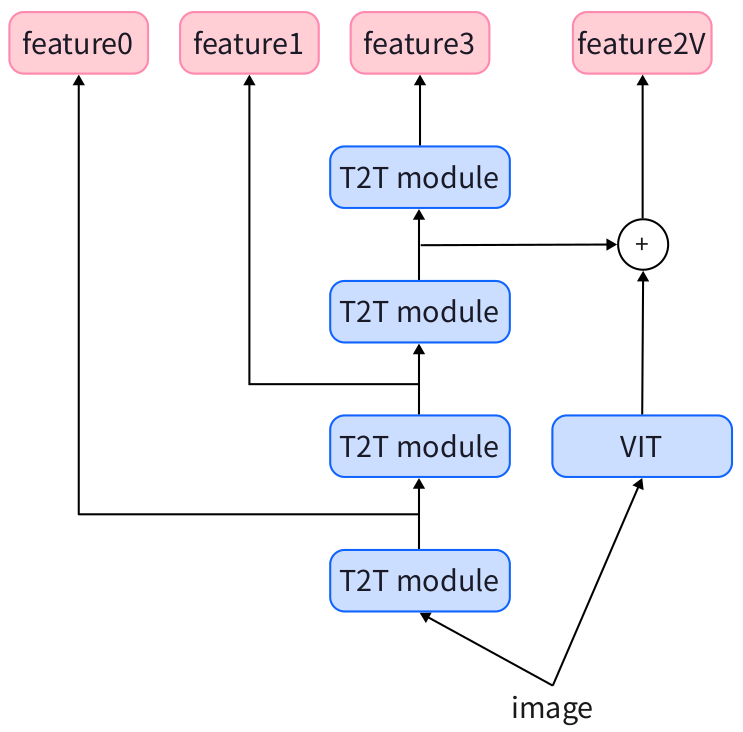

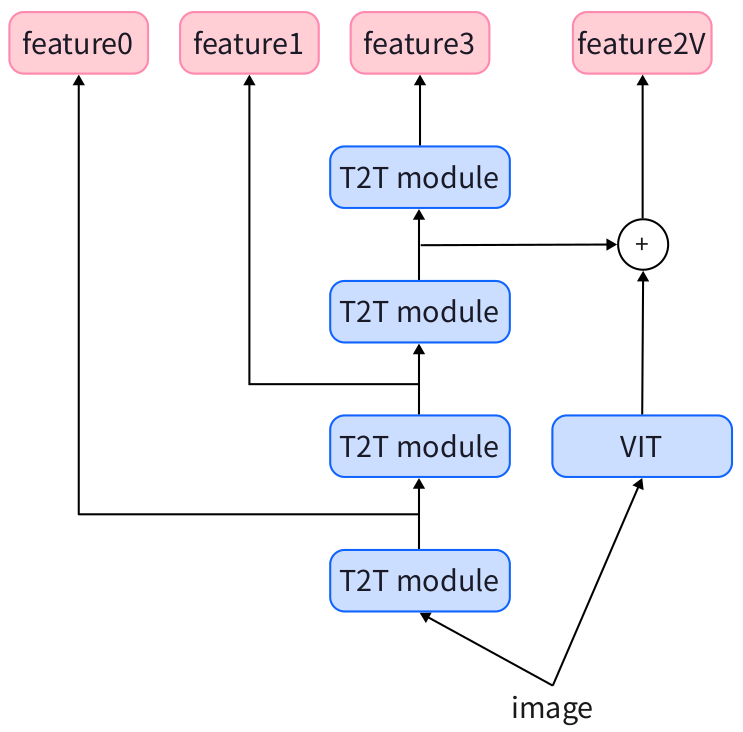

By adding a T2T (Tokens-to-Token) module to transform the features, we fuse features at multiple scales. This improves the model's ability to identify salient objects and brings consistent gains.
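
For readers unfamiliar with T2T, the sketch below shows one Tokens-to-Token step as described in the T2T-ViT paper. First, tokens are reshaped back into a 2D grid. Then an overlapping "soft split" concatenates each k x k neighborhood into a single, longer token. Each step therefore shrinks the grid and widens every token's receptive field.

This is a pure-Python illustration with assumed kernel, stride, and padding values (3, 2, 1). The transformer layer that T2T-ViT applies before each reshape is omitted. The source does not describe how the team wired T2T into SelfReformer.

```python
from typing import List, Tuple

Token = List[float]


def tokens_to_grid(tokens: List[Token], h: int, w: int) -> List[List[Token]]:
    """Re-structurization: reshape a row-major token sequence into an h x w grid."""
    assert len(tokens) == h * w
    return [tokens[i * w:(i + 1) * w] for i in range(h)]


def soft_split(grid: List[List[Token]], k: int, s: int, p: int) -> Tuple[List[Token], int, int]:
    """Soft split: slide overlapping k x k windows (stride s, zero padding p)
    and concatenate each window's tokens into one longer token."""
    h, w, c = len(grid), len(grid[0]), len(grid[0][0])
    zero = [0.0] * c
    out_h = (h + 2 * p - k) // s + 1
    out_w = (w + 2 * p - k) // s + 1
    out: List[Token] = []
    for oi in range(out_h):
        for oj in range(out_w):
            token: Token = []
            for di in range(k):
                for dj in range(k):
                    i, j = oi * s - p + di, oj * s - p + dj
                    inside = 0 <= i < h and 0 <= j < w
                    token.extend(grid[i][j] if inside else zero)  # zero padding
            out.append(token)
    return out, out_h, out_w


def t2t_step(tokens: List[Token], h: int, w: int, k: int = 3, s: int = 2, p: int = 1):
    """One T2T step: tokens -> grid -> fewer, wider tokens (plus the new grid size)."""
    return soft_split(tokens_to_grid(tokens, h, w), k, s, p)
```

With the assumed settings, a 4 x 4 grid of 1-channel tokens becomes a 2 x 2 grid of 9-channel tokens. Stacking such steps yields progressively coarser, more context-rich tokens.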

Multiscale schematic of the T2T module

In addition, we used a voting mechanism for model selection and ultimately fused the results of two encoders, Swin Transformer V2 and DINOv2. Other tricks, such as adjusting the binarization threshold and applying erosion with different kernel sizes, produced only slight fluctuations in the results.
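
The post-processing described above can be sketched as follows, assuming each encoder outputs a per-pixel saliency probability map. The source does not say how the two encoders' outputs were combined, so pixel-wise averaging is an assumption here. The voting used for model selection is not shown. The threshold and erosion kernel size are the knobs the team reports as causing only slight fluctuations.

```python
from typing import List

ProbMap = List[List[float]]  # per-pixel saliency probability, H x W
Mask = List[List[int]]       # binary mask, H x W


def fuse(maps: List[ProbMap]) -> ProbMap:
    """Assumed fusion: pixel-wise mean of the encoders' probability maps."""
    n = len(maps)
    h, w = len(maps[0]), len(maps[0][0])
    return [[sum(m[i][j] for m in maps) / n for j in range(w)] for i in range(h)]


def binarize(prob: ProbMap, threshold: float = 0.5) -> Mask:
    """Pixels at or above the threshold become salient (1)."""
    return [[int(v >= threshold) for v in row] for row in prob]


def erode(mask: Mask, k: int = 3) -> Mask:
    """Binary erosion with a k x k square (k assumed odd). A pixel stays 1 only
    if every in-image pixel of its window is 1; out-of-image pixels are ignored,
    so the image border itself does not erode the object."""
    h, w, r = len(mask), len(mask[0]), k // 2
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            out[i][j] = int(all(
                mask[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            ))
    return out
```

A typical call chain is `erode(binarize(fuse([swin_map, dino_map]), 0.5), 3)`, where `swin_map` and `dino_map` are hypothetical names for the two encoders' outputs.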

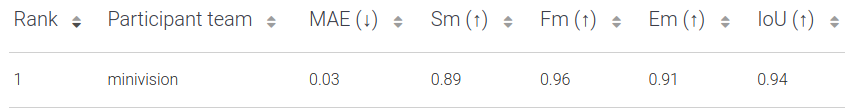

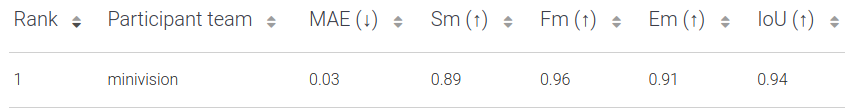

Our solution ranked first on all three metrics, Sm (S-measure), Em (E-measure), and IoU, and first overall.
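
Of the three metrics, IoU is the simplest to state, and the sketch below computes it for a pair of binary masks. The challenge's exact protocol (for example, how predictions are binarized and whether scores are averaged per image) is not given here. Returning 1.0 when both masks are empty is an assumed convention. S-measure (structural similarity) and E-measure (enhanced alignment) are more involved and are not shown.

```python
from typing import List

Mask = List[List[int]]  # binary mask, H x W, values 0 or 1


def iou(pred: Mask, gt: Mask) -> float:
    """Intersection over union of two same-sized binary masks."""
    inter = union = 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            inter += int(p == 1 and g == 1)
            union += int(p == 1 or g == 1)
    return inter / union if union else 1.0  # both empty: treated as a perfect match
```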

03 Visual Question Answering Track: Minivision Technology's Third-Place Solution

The visual question answering task requires a model to understand the semantics of a question, the visual content of an image, and the relationship between the two, and then to answer questions about the image.

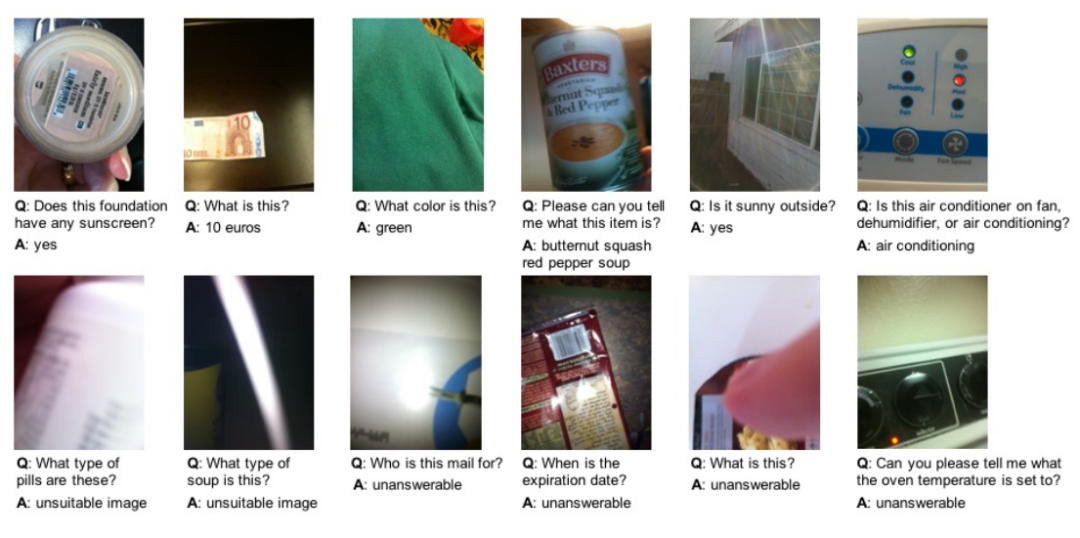

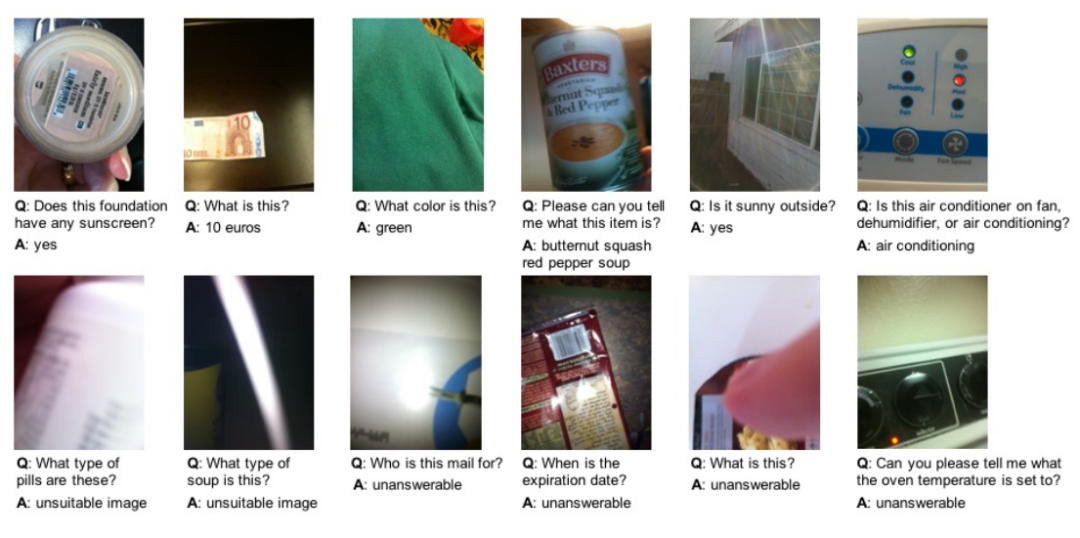

In the VizWiz VQA dataset, 10 answers were collected for each question. Many images are incompletely framed, blurry, or show unclear scenes, and the questions and answers are also more colloquial.
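
Because each question has 10 human answers, predictions are typically scored with the standard VQA accuracy metric, which the VizWiz VQA benchmark also uses. An answer earns min(#matching humans / 3, 1), averaged over every leave-one-out subset of the 10 answers. The sketch below uses a simplified normalization (lowercasing and whitespace cleanup only). The official evaluation script additionally normalizes punctuation, articles, and number words, so scores may differ slightly.

```python
from typing import List


def normalize(ans: str) -> str:
    # Simplified normalization; the official script does more (punctuation,
    # articles, number words), so this is an approximation.
    return " ".join(ans.lower().strip().split())


def vqa_accuracy(pred: str, human_answers: List[str]) -> float:
    """min(#matches / 3, 1), averaged over all leave-one-out answer subsets."""
    pred = normalize(pred)
    gts = [normalize(a) for a in human_answers]
    scores = []
    for i in range(len(gts)):
        others = gts[:i] + gts[i + 1:]          # drop one human answer
        matches = sum(a == pred for a in others)
        scores.append(min(1.0, matches / 3))
    return sum(scores) / len(scores)
```

For example, if 3 of 10 people answered "yes" and 7 answered "no", predicting "yes" scores 0.9 and predicting "no" scores 1.0. Colloquial, inconsistent answers therefore directly lower the achievable score.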

The organizers provided 20,523 image-question pairs for training, 4,319 for validation, and 8,000 for testing.

The official dataset

For data augmentation, we took an approach similar to the one used in the salient object detection track to increase data diversity. We also used a large multimodal model to generate corresponding question-answer pairs, effectively expanding both the image data and the text corpus.
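
One way to turn a large multimodal model's free-text output into training pairs is sketched below. Everything here is hypothetical: the prompt wording, the "Q: ... / A: ..." output format, and the rule that drops repeated questions (case-insensitive) are illustrative assumptions, not the team's settings. The model call itself is left out.

```python
import re
from typing import List, Set, Tuple

# Hypothetical prompt; the wording actually used is not stated in the source.
PROMPT = (
    "Look at the image and write {n} short questions a blind user might ask "
    "about it, each followed by a short answer, in the form:\n"
    "Q: <question>\nA: <answer>"
)

# One "Q: ..." line immediately followed by one "A: ..." line.
QA_PATTERN = re.compile(r"^\s*Q:\s*(.+?)\s*\n\s*A:\s*(.+?)\s*$", re.MULTILINE)


def build_prompt(n: int = 3) -> str:
    return PROMPT.format(n=n)


def parse_qa_pairs(text: str) -> List[Tuple[str, str]]:
    """Extract (question, answer) pairs, keeping the first copy of each question."""
    seen: Set[str] = set()
    pairs: List[Tuple[str, str]] = []
    for q, a in QA_PATTERN.findall(text):
        key = q.lower()
        if key not in seen:
            seen.add(key)
            pairs.append((q, a))
    return pairs
```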

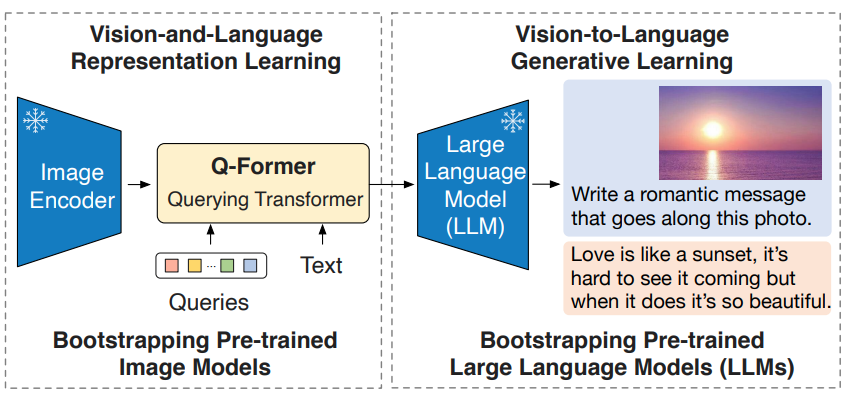

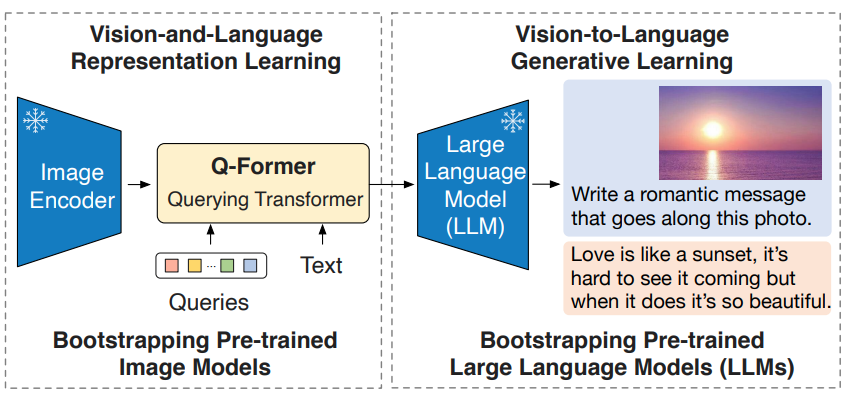

We use BLIP-2 as the base framework. We applied prompt enhancement to the text corpus and fine-tuned the Q-Former, the component that bridges the vision and language models. We also adapted several model variants, such as T5, ViT-L, and ViT-G, to predict answers. Finally, we fused the predictions of these models using a voting mechanism.

BLIP-2 model architecture diagram
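
The final answer-level fusion can be sketched as a simple majority vote. The source does not specify the voting rule. This sketch assumes one vote per model variant and light normalization (trimming and lowercasing), with ties broken in favor of the first model listed.

```python
from collections import Counter
from typing import List


def vote(predictions: List[str]) -> str:
    """Majority vote over answers from several model variants.
    Ties go to the tied answer that appears first in the list."""
    if not predictions:
        raise ValueError("no predictions to vote on")
    normalized = [p.strip().lower() for p in predictions]
    counts = Counter(normalized)
    best = max(counts.values())
    for p in normalized:  # first answer reaching the top count wins
        if counts[p] == best:
            return p
    raise AssertionError("unreachable")
```

Ordering the model list by validation accuracy makes the tie-break favor the strongest single model. That is one reasonable choice, but the source does not say which was used.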

Through this competition, we not only enriched our technology stack for large vision models but also drew on the strong feature representation and pattern recognition capabilities of large models. This improved the accuracy and scene generalization of our production algorithms for object detection, tracking, classification, and segmentation, and accelerated the deployment of our algorithms in a variety of complex real-world scenarios.

The competition gave the Minivision team an opportunity to demonstrate its technical strength to AI developers worldwide.

Building on this "one first place, one third place" result, the Minivision team hopes to explore more application opportunities for computer vision technology, especially large models. The team also aims to build up technical expertise so that AI can benefit smart cities, industrial production, robotics, and other fields.