With the striking success of artificial intelligence across many fields, deep learning has become a household term. However, as networks grow deeper, datasets grow larger, and training takes longer, a pressing question has emerged: how can networks be accelerated without sacrificing accuracy, and even be trained and tested on CPUs or mobile devices?

Researchers from the AI technology team at Minivision Technology have published a paper titled "Performance Guaranteed Network Acceleration via High Order Residual Quantization". It proposes a new method for compressing deep networks while preserving model accuracy. In their experiments, the method made the network about 32 times smaller and about 30 times faster. Earlier methods have achieved comparable reductions in size and gains in speed, but the method proposed in this paper does better at preserving accuracy.

New Compression Method HORQ: Accelerating Network Computing while Ensuring Accuracy

Besides network pruning and sparse approximation, network binarization is a common way to accelerate networks. Typically, the original floating-point values are replaced with +1 and -1. This turns the multiplications in convolution into additions and subtractions. If both the inputs and the weights are binarized, the multiply-accumulate operations reduce further to bitwise XNOR (equivalently, XOR) operations followed by a bit count.
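The following minimal Python sketch (not taken from the paper) makes the last point concrete. It shows that the dot product of two ±1 vectors can be computed from their bit encodings using one XNOR and one bit count. The encoding (+1 as bit 1, -1 as bit 0) and the helper names `encode_bits` and `xnor_popcount_dot` are illustrative assumptions.

```python
import random


def encode_bits(v):
    # Hypothetical encoding: +1 -> bit 1, -1 -> bit 0, packed into a Python int
    bits = 0
    for i, s in enumerate(v):
        if s > 0:
            bits |= 1 << i
    return bits


def xnor_popcount_dot(a_bits, b_bits, n):
    # XNOR is 1 where the signs agree (product +1) and 0 where they differ (product -1),
    # so dot = agree - (n - agree) = 2 * agree - n
    mask = (1 << n) - 1
    agree = bin(~(a_bits ^ b_bits) & mask).count("1")
    return 2 * agree - n


random.seed(42)
n = 64
a = [random.choice((-1, 1)) for _ in range(n)]
b = [random.choice((-1, 1)) for _ in range(n)]

direct = sum(x * y for x, y in zip(a, b))  # ordinary multiply-accumulate
binary = xnor_popcount_dot(encode_bits(a), encode_bits(b), n)
print(direct, binary)  # the two values are equal
```

Agreeing positions contribute +1 and disagreeing positions contribute -1, so the dot product equals 2 * popcount(XNOR) - n. One 64-bit machine word can hold 64 such signs, which is where the computational savings come from.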

Binarization seems like a reasonable compression method. However, if the network inputs are binarized by simple thresholding, the accuracy of the final model can no longer be guaranteed. Yet if the network is not binarized, the computational and storage advantages of binarization are lost.

The HORQ (High Order Residual Quantization) method proposed in the paper binarizes a layer's inputs by quantizing their high-order residuals. It uses binary operations to accelerate network computation while keeping the accuracy of the trained binary network high.

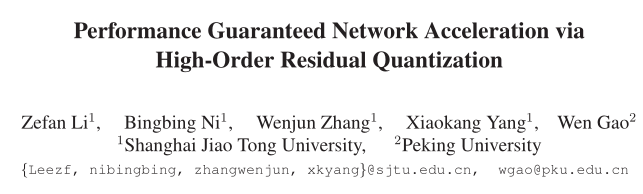

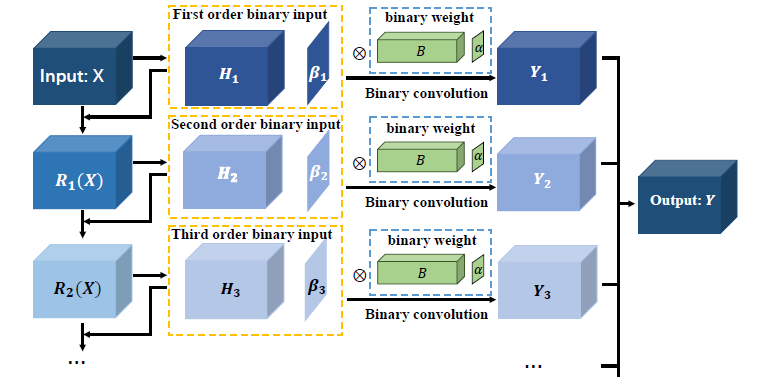

Figure 1 shows how to use the HORQ method to perform residual quantization on a regular convolutional layer.

Figure 1 HORQ structure

Earlier binarization methods, such as XNOR-Net, simply threshold the input. This can be viewed as a first-order binary approximation of the floating-point values. Building on this idea, the paper proposes the HORQ framework, which applies threshold quantization recursively. As shown in Figure 1, the first threshold quantization produces a first-order approximation. The residual of that approximation is then computed and binarized in a new round of threshold quantization, and the process can be repeated for higher orders. Approximating these high-order residuals yields binary feature maps at different scales, which are summed to produce the final output.
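The recursion described above can be sketched in a few lines of NumPy. This is an illustrative sketch under simplifying assumptions, not the authors' implementation. It treats the input as a flat vector and uses one scalar scale per order, namely the mean absolute value of the current residual, as in XNOR-Net-style thresholding. In a real convolutional layer, the scales may instead be computed per region. The function names `binarize`, `horq`, and `reconstruct` are hypothetical.

```python
import numpy as np


def binarize(r):
    # First-order threshold quantization: r ~ beta * h with h = sign(r), beta = mean(|r|).
    # For a fixed h = sign(r), this beta is the least-squares optimal scale.
    h = np.where(r >= 0, 1.0, -1.0)
    beta = np.abs(r).mean()
    return beta, h


def horq(x, order):
    # Recursively binarize the residual: x ~ beta_1*H_1 + beta_2*H_2 + ... + beta_K*H_K
    residual = x.astype(np.float64)
    terms = []
    for _ in range(order):
        beta, h = binarize(residual)
        terms.append((beta, h))
        residual = residual - beta * h  # what is left for the next order to approximate
    return terms


def reconstruct(terms):
    # Sum the scaled binary maps from all orders
    return sum(beta * h for beta, h in terms)


rng = np.random.default_rng(0)
x = rng.standard_normal(10_000)
for k in (1, 2, 3):
    err = np.linalg.norm(x - reconstruct(horq(x, k))) / np.linalg.norm(x)
    print(f"order {k}: relative L2 error = {err:.3f}")
```

With this choice of scale, each additional order reduces the squared error by n * beta^2, where n is the vector length. The approximation error therefore strictly decreases as the order grows (whenever the residual is nonzero), at the cost of one more binary pass per order.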

Experimental Results

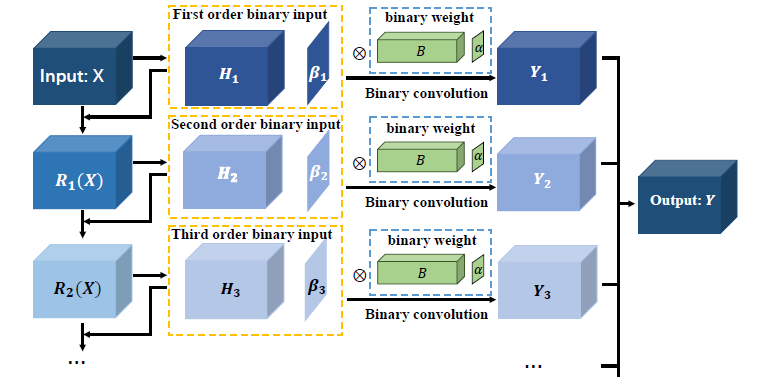

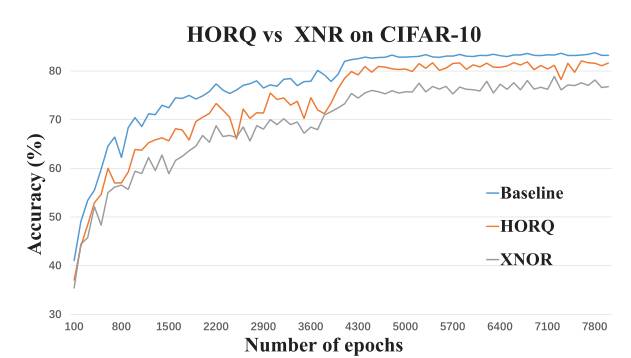

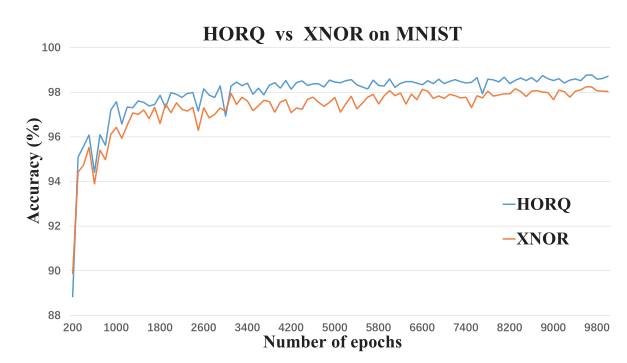

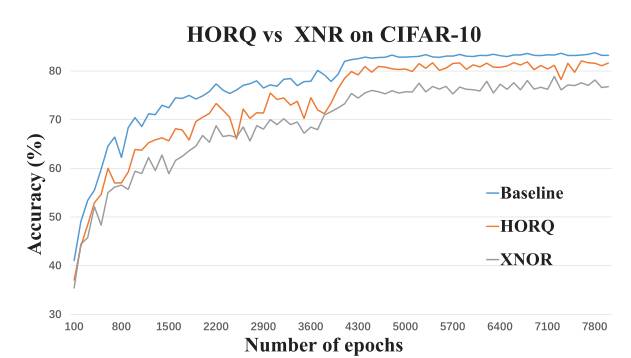

The experiments were conducted on the MNIST and CIFAR-10 datasets. They show that HORQ-Net has a clear advantage over previous methods that binarize the input with simple first-order thresholding:

Figure 2 MNIST Experiment

Figure 3 CIFAR-10 Experiment

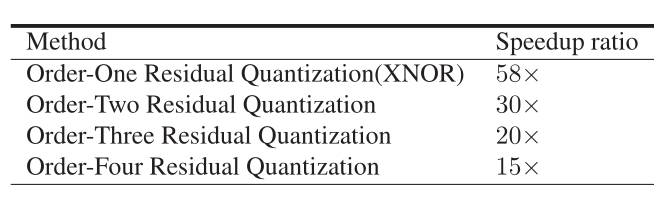

With second-order residual quantization, the network became about 32 times smaller and about 30 times faster. Compared with XNOR-Net, it achieved higher test accuracy on both MNIST and CIFAR-10. It also showed potential for training networks on CPUs.
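The roughly 32-fold size reduction follows from storing each 32-bit floating-point weight as a single sign bit. The sketch below checks this arithmetic on a hypothetical 3x3 convolution layer with 256 output and 128 input channels. Keeping one float32 scale per output filter is an assumption borrowed from XNOR-Net-style binarization. That small overhead is why the ratio lands slightly below 32.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical layer: 256 output channels, 128 input channels, 3x3 kernels, float32 weights
w = rng.standard_normal((256, 128, 3, 3)).astype(np.float32)

# Assumed binary representation: one sign bit per weight plus one float32 scale per filter
alpha = np.abs(w).mean(axis=(1, 2, 3)).astype(np.float32)
bits = np.packbits(w.reshape(-1) >= 0)  # packs 8 signs into each byte

full_bytes = w.nbytes
binary_bytes = bits.nbytes + alpha.nbytes
ratio = full_bytes / binary_bytes
print(f"float32: {full_bytes} bytes, binary: {binary_bytes} bytes, ratio: {ratio:.1f}x")
```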

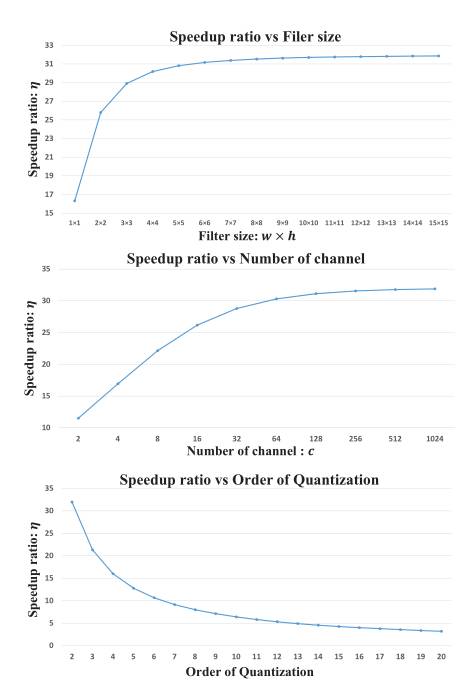

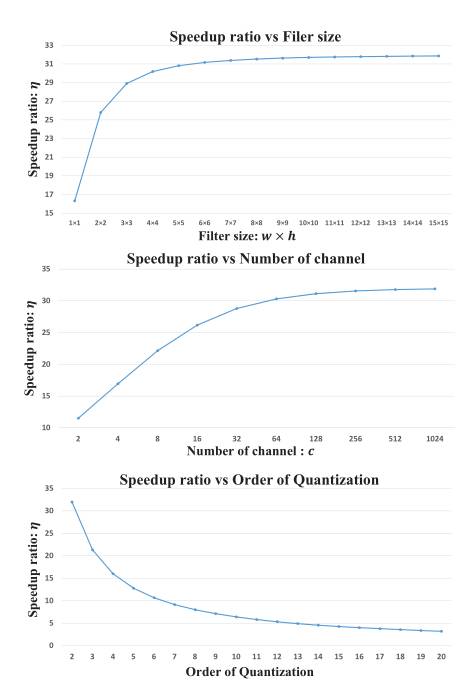

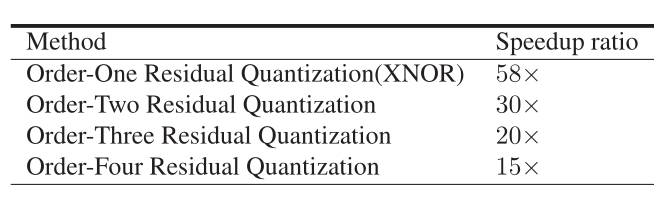

Figure 4 Analysis of the HORQ speedup ratio

Figure 5 HORQ speedup ratio versus quantization order

The speedup that HORQ achieves on a convolutional layer depends strongly on the kernel size, the number of feature maps, and the order of residual quantization, as Figures 4 and 5 show. In addition, as Figure 6 shows, binarization substantially reduces the storage the model requires.
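These dependencies can be illustrated with a back-of-the-envelope cost model. The model is an assumption adapted from the operation count in the XNOR-Net paper, not the analysis behind Figures 4 and 5. It assumes a float convolution costs c * N_W multiply-adds per output position, where c is the number of input channels and N_W the kernel area. It assumes a binary convolution costs c * N_W / 64 word-level bitwise operations plus one scaling operation, repeated once per residual order K. The function name `estimated_speedup` is hypothetical.

```python
def estimated_speedup(c, kernel_hw, order, word_bits=64):
    """Idealized speedup of an order-K binary convolution over a float convolution.

    Assumed cost model (adapted from XNOR-Net's operation count, not from the HORQ paper):
      float cost  = c * N_W
      binary cost = K * (c * N_W / word_bits + 1)
    which gives S = word_bits * c * N_W / (K * (c * N_W + word_bits)).
    """
    n_w = kernel_hw[0] * kernel_hw[1]
    float_ops = c * n_w
    binary_ops = order * (c * n_w / word_bits + 1)
    return float_ops / binary_ops


for c in (64, 256):
    for k in (1, 3):
        row = [f"{estimated_speedup(c, (k, k), order):5.1f}" for order in (1, 2, 3)]
        print(f"c={c:3d} kernel={k}x{k} speedup for orders 1-3:", " ".join(row))
```

Under this model, the speedup grows with the kernel size and the number of channels, and it is capped at word_bits / K. Raising the order therefore buys accuracy at the cost of speed. Measured speedups also depend on memory traffic and implementation details, which this model ignores.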

The HORQ method can serve as a basic quantization scheme for binarizing network inputs. It uses binary quantization to speed up computation while preserving model accuracy. In addition, the residual order can be tuned to fit the constraints of the target hardware.

The method has broad prospects for further development and application. For general deep networks, HORQ can substantially speed up computation. Because both the inputs and the weights of every layer are binarized, forward-propagation time drops sharply and the model's storage footprint shrinks dramatically. This makes it feasible to run large deep networks on resource-constrained platforms such as mobile phones and laptops. At the same time, high-order residual quantization preserves accuracy, so the network no longer suffers the large accuracy drop caused by naive binarization.

The paper has been accepted to ICCV 2017. The work was carried out by Dr. Li Zefan of Minivision Technology's AI team under the guidance of Professor Ni Bingbing, Professor Zhang Wenjun, Professor Yang Xiaokang, and Academician Gao Wen.

The paper, "Performance Guaranteed Network Acceleration via High Order Residual Quantization", will appear in the ICCV proceedings, where the full details will be available.