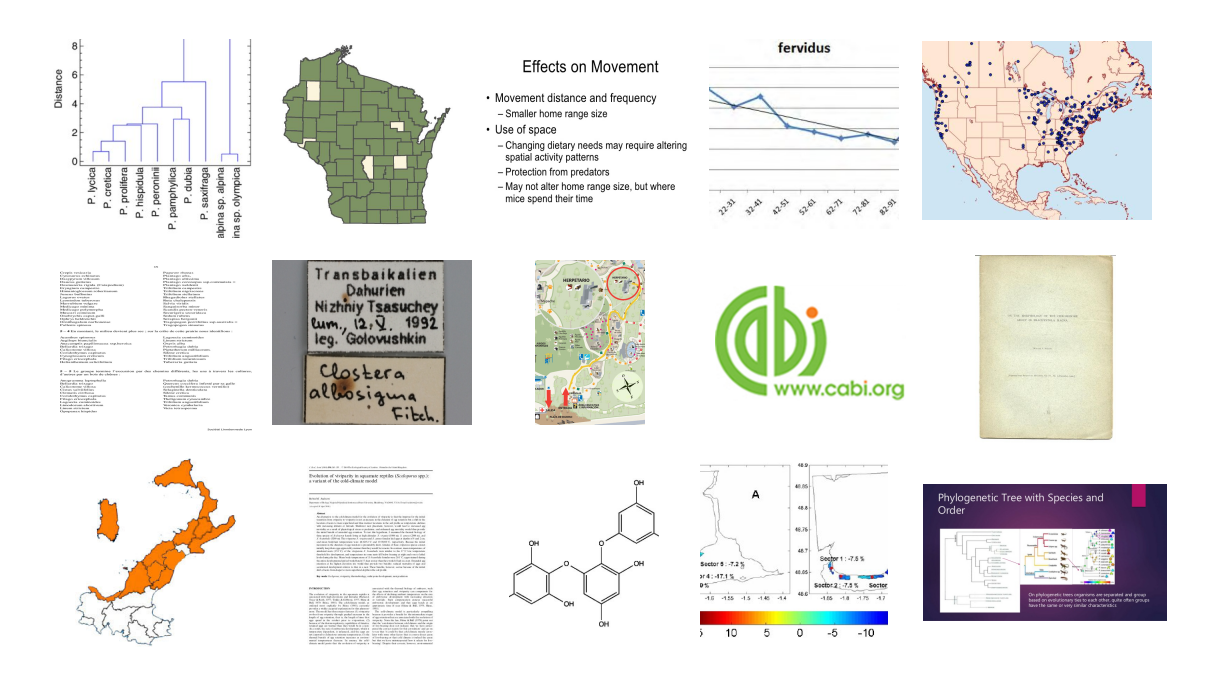

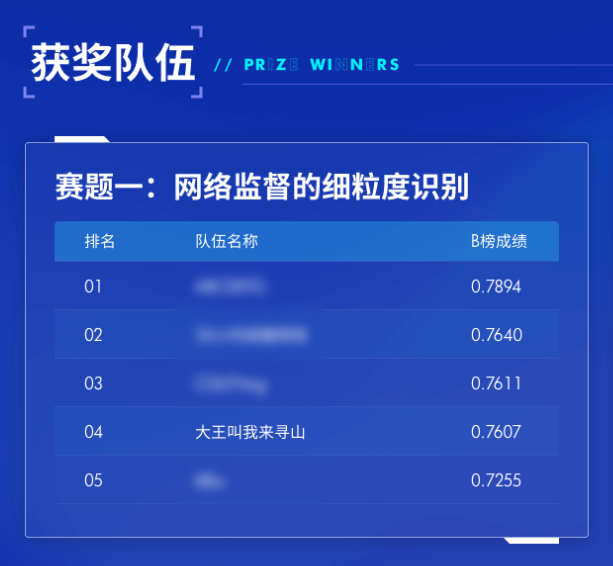

Recently, the final results of the ACCV 2022 International Fine-Grained Image Analysis Challenge were announced. Minivision Technology competed in the webly-supervised fine-grained recognition track and placed fourth among 133 participating teams.

The ACCV 2022 Fine-Grained Image Analysis Challenge is an international competition organized by Nanjing University of Science and Technology and the University of Wollongong in Australia. The competition covers fine-grained image localization, recognition, and retrieval on more than 800,000 web images spanning 5,000 subcategories, a setting with broad practical value.

When putting AI into production, we also face algorithm tasks in fine-grained scenarios, and improving algorithm accuracy there remains a topic worth continued exploration.

Minivision's award-winning solution has given us new inspiration. It deepens our understanding of fine-grained recognition, and it should also help tighten the link between production and research and speed up the deployment of algorithms.

1. Technical Solution

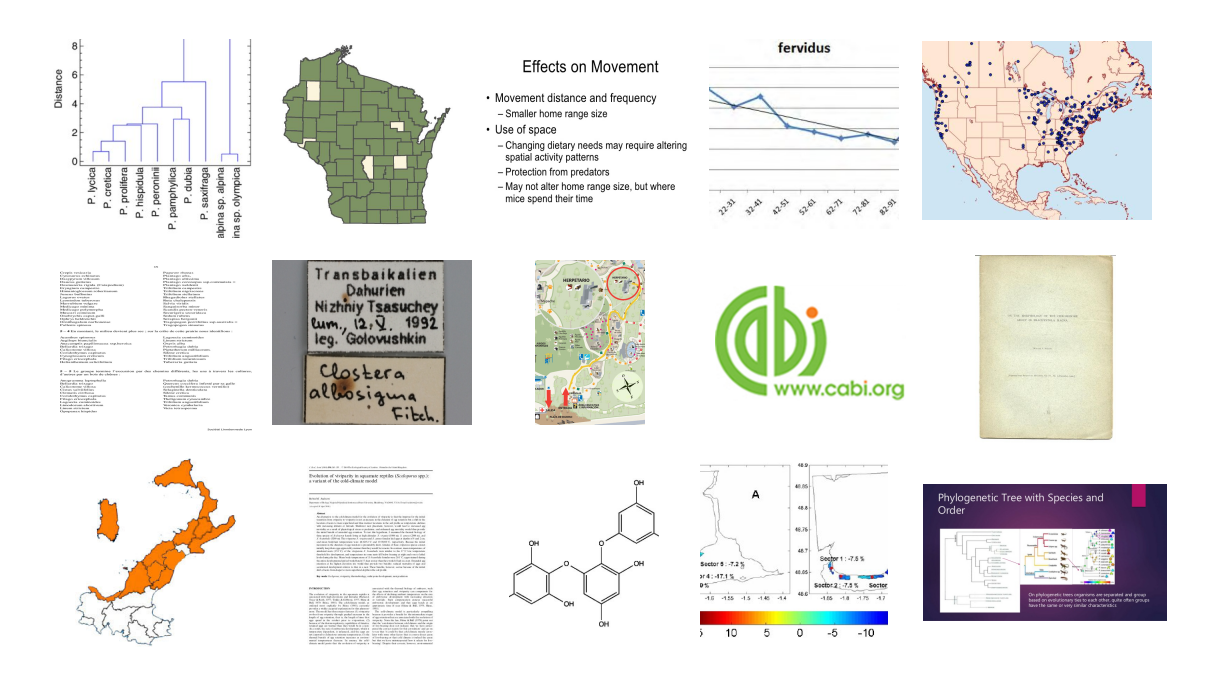

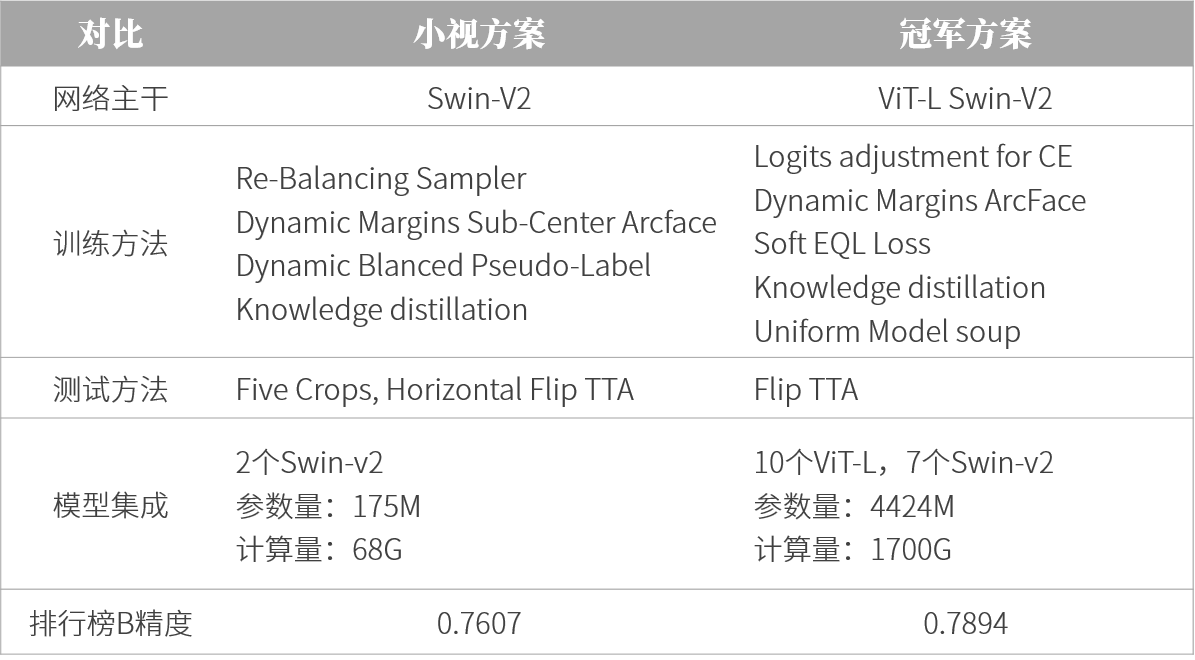

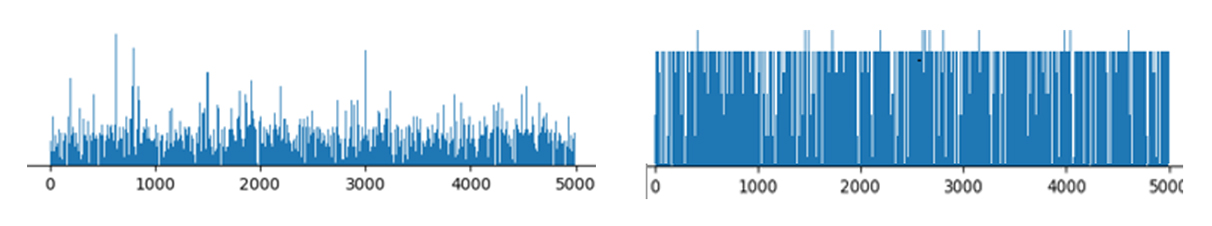

The data provided by the organizers contains a large amount of irrelevant noise, such as charts, text, and maps, which degrades training. We used a feature extractor to build a feature library of noisy images and matched the training images against it, filtering out nearly 50,000 noisy samples and improving the purity of the training set.

Selected noise samples

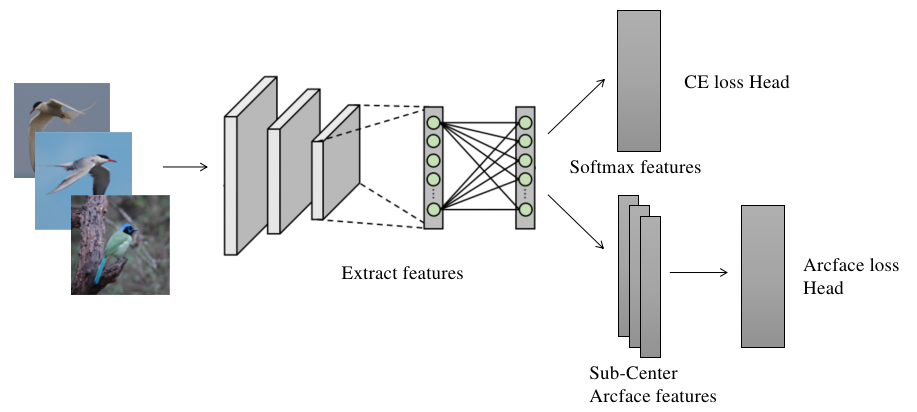
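The noise-filtering step can be illustrated with a minimal sketch. It assumes that image embeddings have already been extracted and that matching is done by cosine similarity against the noise library; the function names, the toy 2-D embeddings, and the `threshold` value are hypothetical and are not taken from the competition code.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products equal cosine similarity."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)

def flag_noisy(features, noise_library, threshold=0.8):
    """Return a boolean mask marking images that match the noise library.

    features:      (N, D) embeddings of training images
    noise_library: (M, D) embeddings of known noise images (charts, text, maps)
    threshold:     hypothetical cosine-similarity cutoff
    """
    sims = l2_normalize(features) @ l2_normalize(noise_library).T  # (N, M)
    return sims.max(axis=1) >= threshold

# Toy example with 2-D embeddings.
noise_library = np.array([[1.0, 0.0]])
features = np.array([[0.9, 0.1],   # close to the noise direction
                     [0.0, 1.0]])  # orthogonal to it
mask = flag_noisy(features, noise_library)
clean_features = features[~mask]   # keep only images that did not match
```

In practice the threshold would need to be tuned on a manually checked subset, since a cutoff that is too low also removes valid training images.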

To handle the large variation in target scale across the dataset, we selected Swin Transformer as the backbone network. Its multi-head attention and shifted-window masking mechanisms extract target features effectively while balancing training speed and accuracy.
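To make the window mechanism concrete, here is a small NumPy sketch of the partitioning step only: splitting a feature map into non-overlapping windows, and doing the same after a cyclic shift of half a window. The attention computation and the attention mask applied to shifted windows are omitted, and the function names are chosen for this illustration.

```python
import numpy as np

def window_partition(x, window_size):
    """Split an (H, W, C) feature map into non-overlapping windows.

    Returns an array of shape (num_windows, window_size, window_size, C).
    H and W must be divisible by window_size.
    """
    H, W, C = x.shape
    x = x.reshape(H // window_size, window_size, W // window_size, window_size, C)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window_size, window_size, C)

def shifted_window_partition(x, window_size):
    """Cyclically shift the map by half a window, then partition it."""
    shift = window_size // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window_size)

# 8x8 map with one channel; each value encodes its own position (row * 8 + col).
feature_map = np.arange(8 * 8).reshape(8, 8, 1)
regular = window_partition(feature_map, 4)          # 4 windows of 4x4
shifted = shifted_window_partition(feature_map, 4)  # same layout after the shift
```

Alternating regular and shifted partitions between layers lets information flow across window borders while attention is still computed inside small windows.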

Training uses a range of data preprocessing and augmentation methods, including Resize, Flip, Mixup, CutMix, AutoAugment, and RandomErase, to improve the model's generalization.
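As one example from this list, Mixup blends pairs of images and their labels with a random weight. The sketch below is a generic NumPy version; the `alpha` value, the batch shape, and the function name are assumptions made for illustration, not the settings used in the competition.

```python
import numpy as np

def mixup(images, labels_onehot, alpha=0.2, rng=None):
    """Blend each sample with a randomly chosen partner from the same batch.

    images:        (B, ...) float array
    labels_onehot: (B, num_classes) one-hot or soft labels
    alpha:         Beta distribution parameter (assumed value)
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)          # mixing weight in [0, 1]
    perm = rng.permutation(len(images))   # partner index for each sample
    mixed_images = lam * images + (1.0 - lam) * images[perm]
    mixed_labels = lam * labels_onehot + (1.0 - lam) * labels_onehot[perm]
    return mixed_images, mixed_labels, lam

rng = np.random.default_rng(0)
images = rng.random((4, 3, 8, 8))    # toy batch: 4 images, 3 channels, 8x8
labels = np.eye(5)[[0, 1, 2, 3]]     # one-hot labels over 5 classes
mixed_images, mixed_labels, lam = mixup(images, labels, rng=rng)
```

Because the labels are mixed with the same weight as the images, each mixed label still sums to 1 and can be used directly with a soft-label cross-entropy loss.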

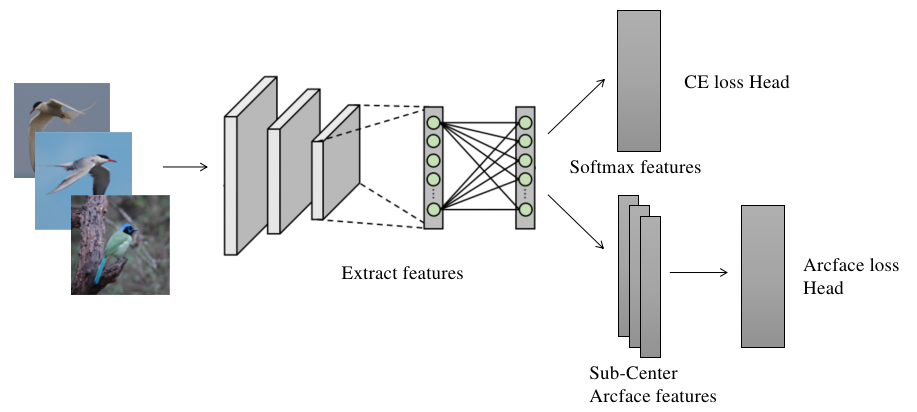

We added a Sub-center ArcFace loss as an auxiliary supervision head when fine-tuning the model. It maintains multiple sub-centers within each class, which reduces the impact of noisy samples and helps the model converge further. We also introduced dynamic margins so that categories with few samples receive more attention, reducing the long-tail effect. In the later stages of training, we found that the accuracy of the auxiliary supervision head exceeded that of the main head.
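The following NumPy sketch shows one way a Sub-center ArcFace head with per-class margins can be computed. It is an illustration under stated assumptions: the margin rule (class count to the power of -1/4, rescaled to a range), the margin bounds, the scale, and the number of sub-centers are all hypothetical, and the handling of angles that exceed π after adding the margin is omitted.

```python
import numpy as np

def dynamic_margins(class_counts, m_min=0.2, m_max=0.5):
    """Map class sizes to margins so that rarer classes get larger margins.

    Uses count^(-1/4) rescaled to [m_min, m_max]; the rule and both
    bounds are assumptions made for this sketch.
    """
    inv = np.asarray(class_counts, dtype=float) ** -0.25
    span = inv.max() - inv.min()
    scaled = (inv - inv.min()) / span if span > 0 else np.zeros_like(inv)
    return m_min + (m_max - m_min) * scaled

def subcenter_arcface_logits(features, centers, labels, margins, scale=30.0):
    """features: (B, D); centers: (C, K, D); labels: (B,); margins: (C,)."""
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    w = centers / np.linalg.norm(centers, axis=2, keepdims=True)
    cos_all = np.einsum("bd,ckd->bck", f, w)       # cosine to every sub-center
    cos = np.clip(cos_all.max(axis=2), -1.0, 1.0)  # closest sub-center per class
    rows = np.arange(len(labels))
    theta = np.arccos(cos[rows, labels])
    logits = cos.copy()
    # Add the angular margin on the target class only.
    logits[rows, labels] = np.cos(theta + margins[labels])
    return scale * logits

def cross_entropy(logits, labels):
    """Mean softmax cross-entropy, computed in a numerically stable way."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

rng = np.random.default_rng(0)
margins = dynamic_margins([1000, 100, 10])   # head, middle, and tail class
features = rng.normal(size=(4, 16))          # toy embeddings
centers = rng.normal(size=(3, 3, 16))        # 3 classes, 3 sub-centers each
labels = np.array([0, 1, 2, 2])
logits = subcenter_arcface_logits(features, centers, labels, margins)
loss = cross_entropy(logits, labels)
```

Taking the maximum over sub-centers is what lets noisy samples settle around a non-dominant center instead of pulling the main class center toward them.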

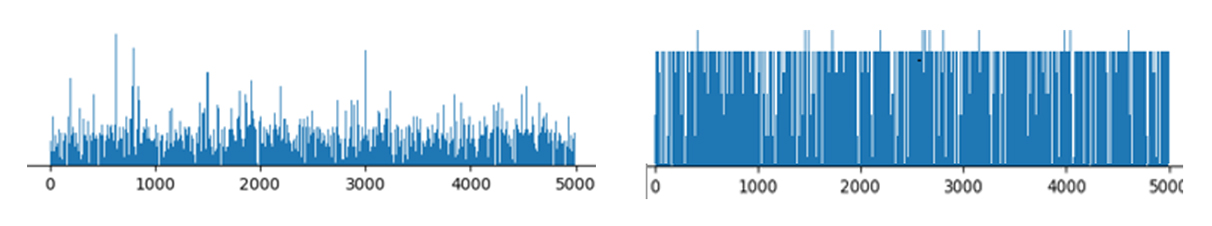

We improved the data sampler by using a re-balancing sampler, which increases how often categories with few samples are seen during training. Training the models with different settings also makes them more complementary.
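A re-balancing sampler can be sketched as sampling with probabilities that shrink as class size grows. The version below is a generic illustration: the `power` parameter and the function name are assumptions, and the exact weighting used in the competition is not stated in this article.

```python
import numpy as np

def rebalanced_sample_probs(labels, power=1.0):
    """Per-sample sampling probabilities that favor rare classes.

    power=0 gives uniform instance sampling; power=1 gives every class
    the same total probability mass (assumed parameterization).
    """
    labels = np.asarray(labels)
    counts = np.bincount(labels)                       # samples per class
    weights = counts[labels].astype(float) ** -power   # rarer class -> larger weight
    return weights / weights.sum()

# Toy long-tailed dataset: 90 samples of class 0, 10 samples of class 1.
labels = np.array([0] * 90 + [1] * 10)
probs = rebalanced_sample_probs(labels)

rng = np.random.default_rng(0)
epoch_indices = rng.choice(len(labels), size=len(labels), replace=True, p=probs)
```

With `power=1.0`, both classes are drawn about equally often per epoch even though one has nine times as many samples; intermediate values trade off between the two extremes.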

We trained on the test set with pseudo labels and applied dynamic equalization so that the predicted categories stay balanced. On this basis, we carried out self-distillation to further improve accuracy.
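The article does not spell out how the equalization or the distillation was done, so the sketch below shows one plausible reading: keep at most a fixed number of the most confident pseudo labels per predicted class, then train a student against the teacher's softened outputs with a KL loss. The cap, the temperature, and all function names are hypothetical.

```python
import numpy as np

def balanced_pseudo_labels(probs, per_class_cap):
    """Keep at most per_class_cap most-confident samples per predicted class.

    probs: (N, C) predicted class probabilities on unlabeled test images.
    Returns (indices, pseudo_labels).
    """
    preds = probs.argmax(axis=1)
    conf = probs.max(axis=1)
    chosen = []
    for c in np.unique(preds):
        idx = np.flatnonzero(preds == c)
        top = idx[np.argsort(-conf[idx])[:per_class_cap]]  # most confident first
        chosen.extend(top.tolist())
    chosen = np.array(sorted(chosen), dtype=int)
    return chosen, preds[chosen]

def log_softmax(z):
    """Row-wise log-softmax, computed in a numerically stable way."""
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions."""
    log_p = log_softmax(teacher_logits / temperature)
    log_q = log_softmax(student_logits / temperature)
    kl = (np.exp(log_p) * (log_p - log_q)).sum(axis=1)
    return temperature ** 2 * kl.mean()

probs = np.array([
    [0.9, 0.1],
    [0.8, 0.2],
    [0.7, 0.3],
    [0.4, 0.6],
])
indices, pseudo = balanced_pseudo_labels(probs, per_class_cap=2)

teacher_logits = np.log(probs)               # toy teacher outputs
student_logits = np.zeros_like(teacher_logits)  # untrained student
loss = distillation_loss(student_logits, teacher_logits)
```

Capping each class prevents the pseudo-labeled set from being dominated by the classes the model already predicts most often.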

In the testing phase, we used test-time augmentation (TTA) with FiveCrop and HorizontalFlip, which significantly improved single-model accuracy. Finally, we fused two Swin Transformer models and submitted the result.
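The test-time procedure can be sketched as averaging predictions over five crops and their horizontal flips, then averaging across models. The code below is a NumPy illustration in which each model is a hypothetical callable that maps a batch of images to class probabilities; the toy models, the crop size, and simple averaging as the fusion rule are assumptions.

```python
import numpy as np

def five_crop(image, size):
    """Four corner crops plus the center crop of an (H, W, C) image."""
    H, W = image.shape[:2]
    top, left = (H - size) // 2, (W - size) // 2
    return [
        image[:size, :size],
        image[:size, W - size:],
        image[H - size:, :size],
        image[H - size:, W - size:],
        image[top:top + size, left:left + size],
    ]

def tta_predict(models, image, crop_size):
    """Average class probabilities over 10 views and over all models."""
    views = []
    for crop in five_crop(image, crop_size):
        views.append(crop)
        views.append(crop[:, ::-1])   # horizontal flip of the same crop
    batch = np.stack(views)           # (10, crop_size, crop_size, C)
    per_model = [model(batch).mean(axis=0) for model in models]
    return np.mean(per_model, axis=0)

def toy_model_a(batch):
    """Stand-in for a trained network: fixed two-class probabilities."""
    return np.tile([0.6, 0.4], (len(batch), 1))

def toy_model_b(batch):
    """Second stand-in model with different fixed probabilities."""
    return np.tile([0.2, 0.8], (len(batch), 1))

image = np.zeros((32, 32, 3))
probs = tta_predict([toy_model_a, toy_model_b], image, crop_size=24)
```

Averaging probabilities is only one fusion rule; weighted averaging or logit averaging are common alternatives when the fused models differ in accuracy.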

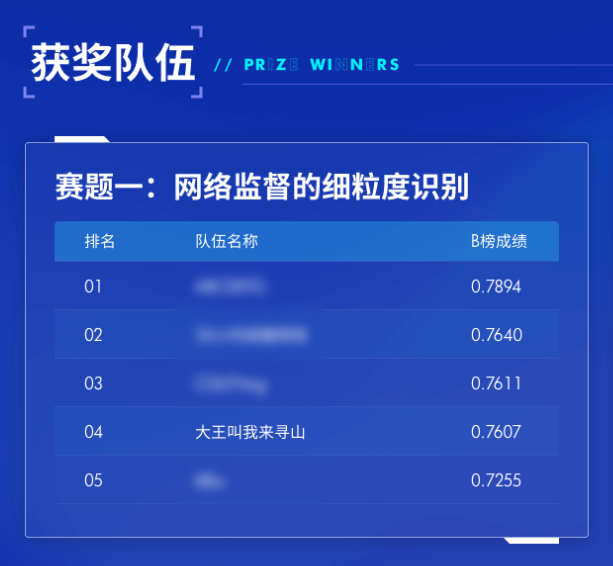

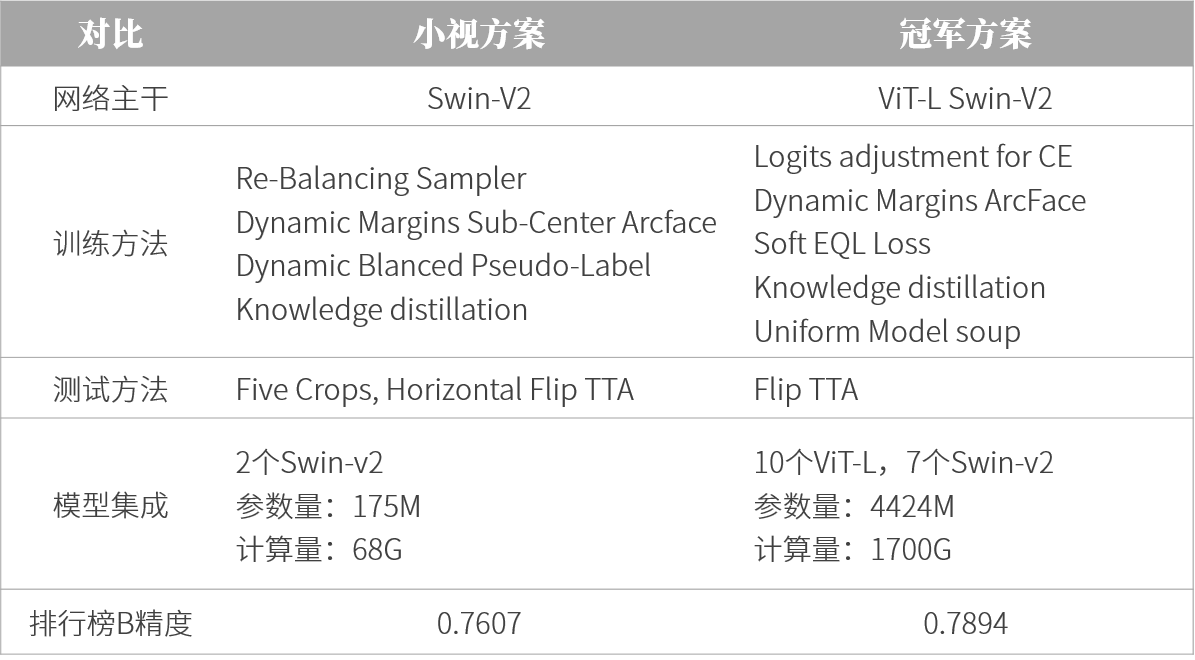

2. Solution Comparison: Minivision Solution vs. Champion Solution

Both our solution and the champion solution follow the technical route of dynamic margins and knowledge distillation. We fused 2 models, while the champion solution fused 17.

With 0.0395 times the parameter count and 0.04 times the computational cost of the champion model, our accuracy trails the champion's by 2.87%.

At the same time, the gap between us and the second and third places is within 0.33%. This demonstrates the efficiency advantage of our model, which makes it easier to carry over to production tasks.

3. Production Application

This competition enriches our technical reserves in fine-grained classification, helps improve the accuracy of our fine-grained algorithms, and supports the deployment of video structuring in real scenarios such as campuses, construction sites, and factories. Examples include detecting students running and fighting, warning about smoking and phone use at gas stations, and warning about workers sleeping on duty in factories.